Ninghai militia rushed to the disaster area in Linhai to help trapped residents.

Armed police officers and soldiers take an active part in typhoon disaster relief.

Armed police soldiers take a nap during a break from typhoon relief work.

The wind roared, the rain poured down, and disaster was at hand!

In the early morning of August 10, Super Typhoon "Lichima" made landfall, lashing the land of Yongcheng with wind and rain. The sudden danger caught many people off guard. Yet in every storm there are figures who stand against the wind on the front line, and in every disaster there are warm moments that move us.

As soon as disaster struck, the troops stationed in Ningbo rushed to the front line of typhoon relief. The flash of "camouflage" in the torrent reflected the soldiers' sincere devotion to the people, and the people's praise reflected the deep bond between soldiers and civilians in the same boat. As one netizen put it: "There is an army called the Chinese Army! There is a kind of soldier called the Chinese soldier! First to charge, at the forefront of typhoon relief! One uniform, a lifetime of duty!"

Ningbo Detachment of the Armed Police: "Fighting to the end" without retreat, rushing to wherever the danger is greatest.

When the storm passed that night, it left Dongwu Town in Yinzhou in a mess.

Super Typhoon "Lichima" was so fierce that it triggered a rare flash flood. In Tiantong Village the water was up to 1.5 meters deep, and most houses were flooded. There were many landslides along the Baozhan Line and the Baodian and Hantian highways; a small mudslide struck the hill behind Tiantong Temple, the entire temple was flooded, and more than 300 people were trapped…

In a crisis, wherever the people need help, the soldiers appear! Mobilized in the pouring rain, the officers and soldiers of the Ningbo Detachment of the Armed Police set out for the "front line" at 7 a.m. in high spirits. Reports of the severe situation in Dongwu Town kept coming in: deep floodwater, many people trapped, roads blocked, and rescuers struggling to get through… Every officer and soldier was anxious, wishing they could sprout wings and fly straight into the disaster area.

What they saw on arrival was even more shocking, but it only strengthened their resolve to carry out the rescue at all costs. The rain was intensifying, the mountain torrents were raging, and the road to the thousand-year-old temple was cut off. Wading through water more than a meter deep, one careless step could mean being swept away. Meanwhile, the temple had lost water and power and become an island in the flood. What could be done? At this critical moment, the soldiers bravely stepped forward, "opening a road" through the mountains and "building a bridge" across the water. Using ropes, they rigged a simple "lifeline", climbed across this "bridge" one by one, and finally reached Tianwangdian Square to begin evacuating people.

Moving so many people under such complicated conditions was clearly no easy task. The storm had wrecked the buildings on both sides of the temple, so the soldiers linked up to form a "human wall" and escorted people down the mountain. Uncle Zhang, a visitor, had sprained his ankle and could barely walk. Without a word, Xie Hongjiu, a squad leader in the mobile squadron, picked him up and carried him, wading through knee-deep torrents along 4 kilometers of mountain road… "When we saw the green military uniforms, we knew our saviors had come," Uncle Zhang said excitedly after the rescue.

Heroes often emerge unexpectedly! Wang Tian, a corporal in the mobile squadron, was due to leave the barracks and retire with honor in just half a month. Since he suffered from a herniated lumbar disc, others assumed he could easily skip the "front line" and stay in camp with a clear conscience. Instead, he went to his commander and volunteered one last time. In his own words: "Getting the chance to fight side by side with my comrades once more before I retire is the best parting gift the army could give me!" He got his wish and joined the rescue team, clearing gravel with his bare hands, carrying broken timber on his shoulders, digging out mud with a shovel, and trudging on foot with rescue equipment…

Then there is Bi Chengqi, head of the Propaganda and Security Unit. His home and two acres of fruit and vegetable fields were flooded, and his pregnant wife was ill with pneumonia. Yet he voluntarily gave up his leave, returned to his post, and went to Tiantong Temple with the first rescue team. Xu Xu, leader of the mobile squadron, stayed on the rescue front line despite illness and limited mobility. On the first day, he led a team that stayed in the temple on watch; on the second day, he joined the main force in cleanup and rescue work, working for more than 30 hours straight until the mission was complete.

Meanwhile, in Lvjian Village, Beilun District, 20 kilometers away, the remnants of "Lichima" were still raging, with floodwater up to 1.5 meters deep and the whole area a vast sheet of water. The officers and soldiers set out once again and threw themselves into the rescue. Few people knew that among them was Jin Chenjiang, a soldier from Linhai, Taizhou. All the while on the front line, he was worried sick: "Communications are down. How is my family? How are my parents?" But he knew his priorities, and he trusted that fellow units would do their utmost to help his family just as he was helping others. After a round of hard work, his squadron leader brought him good news: his parents had been reached, his family was safe, and they had told him to concentrate on saving people!

Clearing silt, hauling debris, rebuilding homes… In all, 215 officers and soldiers worked for 45 hours across six mission areas, moving more than 140 tons of supplies, clearing more than 2,000 cubic meters of rubbish, earth, and rock, eliminating 24 hazards, and disinfecting 14,000 square meters. When the rescue was over, the soldiers, who had worked without a break, hurried off to their next assignment. It was their steadfastness on the front line through the wind and rain that made these typhoon days feel safer and more heartwarming.

Ningbo Military Sub-district: Rushing to Linhai, "Yongcheng Power" warms hearts.

When Super Typhoon "Lichima" struck, the disaster hit not only Yongcheng but also Linhai in Taizhou. The whole city was besieged by floodwater and in a state of full emergency! Linhai appealed to all sectors of society for help, and the people of Ningbo, not far away, were deeply concerned. Many rescue teams from Yongcheng set out through the rain in the middle of the night, full of goodwill, and "Yongcheng Power" shone in Linhai.

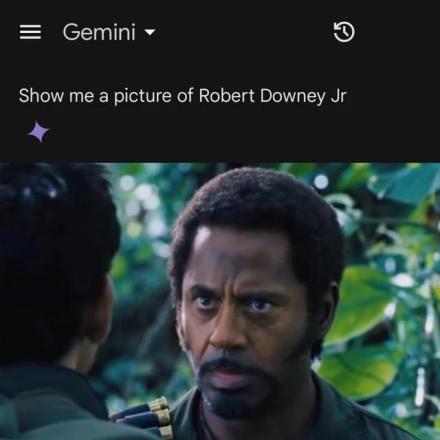

At 6:45 p.m. on the 10th, the Ninghai County People's Armed Forces Department quickly assembled its militia emergency detachment, which was the first to brave the pouring rain and head for Linhai. Meanwhile, the Yuyao People's Armed Forces Department also received orders to send reinforcements, and at 9 p.m. that night 25 Yuyao militiamen set off for the "battlefield". But the situation changed: reinforcements from other directions were cut off by blocked roads, and the disaster in Linhai allowed no delay, so the city urgently organized a second wave of reinforcements. The militia emergency detachments of Cixi, Beilun, and Jiangbei then assembled in haste and set out for Linhai to join the rescue.

Disaster is an order, and time is life! At 10:30 that night, the first Ningbo reinforcements, the Ninghai militia emergency detachment, reached Linhai with 6 assault boats, 4 rubber boats, and other vital supplies. At 0:55 the next morning, the Yuyao militia also reached their destination. More support from all sectors of Yongcheng kept arriving, and "Yongcheng Power" made itself felt in Linhai.

Rescue! Rescue! Rescue! As soon as our city's militia arrived, they plunged into intense rescue operations. They were on the move all night without sleep, yet remained in high spirits. More than 130 Ningbo militiamen, operating 33 assault boats and 7 rubber boats, entered Linhai's old town along a previously scouted "fast track" to carry out a full-scale rescue.

Yuan Zhenfeng, a member of the Yuyao militia emergency detachment born in 1993, is a veteran. After taking part in typhoon relief at home, he rushed to Linhai late at night and stayed on the front line for two days and two nights. What few people knew was that on the morning of the 10th he had suffered a gastritis attack so bad he could hardly hold out. He went to get an intravenous drip, but later pulled out the needle and headed for the rescue front.

He recorded the Linhai rescue in posts on his WeChat Moments: "I rushed to Linhai overnight yesterday (the 10th) and entered the disaster area this morning. As soon as I went in, my phone lost signal and I couldn't send any messages. By the time we got in, the flooding had eased and the water was falling fast. When I got the order, the disaster was at its worst; the water was deep and fast, so I only brought the assault boat. But by the time we reached the disaster area, the water had dropped noticeably, so in many places we had to push the boat along with people sitting in it. We became trackers, 'trackers full of love'." "What impressed me most in the disaster area were all the different rescue teams and the convoys delivering relief goods. By yesterday evening, people were streaming into Linhai to offer support, bringing mineral water, bread, eggs, sushi, pizza… One old man even brought a whole jar of Yangmei (bayberry) wine. We didn't eat any of it, since discipline must not slip, but our hearts were still warm. I saw with my own eyes that when one place is in trouble, help comes from everywhere."

By the evening of the 11th, the first militia emergency detachments our city had sent to Linhai had completed their mission and were returning to base one after another. The militia emergency detachments of five districts, counties, and county-level cities made a total of 272 boat sorties, rescued 1,064 trapped people, and transported 10.6 tons of supplies.

However, the post-disaster cleanup in Linhai proved far harder than anyone expected, and a new round of reinforcements was organized. At 4 a.m. on the 15th, while it was still dark outside, the militia training base in Ninghai County was already brightly lit as people assembled, loaded up, and lined up, the figures in camouflage looking especially tall under the lights. At about 5:30, with the supplies loaded, 150 Ninghai militiamen set out once again…

Eastern Theater Navy: Battling the "King of Wind" and showing once again the bond between army and people.

Facing the fierce "King of Wind" Lichima, the Eastern Theater naval forces stationed in Ningbo activated their emergency response mechanism immediately. The officers and soldiers assigned to emergency rescue and disaster relief, united as one, rushed to the disaster area to organize evacuations and carry out rescue work, minimizing the typhoon's toll on lives and property in our city.

On the evening of the 9th, strong winds and heavy rain began to sweep across Yongcheng, and the water level of Shiyan Reservoir in Fenghua, which holds more than 700,000 cubic meters, soared to the warning line. The danger was pressing. The officers and soldiers of a naval unit stationed in Fenghua immediately stepped up patrols and closely monitored the water level. At the same time, they urgently dispatched evacuation teams to help the local village committee check the terrain house by house, eliminate hazards, and move people to safety.

The next day brought the toughest test. Five townships in Yinzhou were also hit, with roads deeply flooded and some people trapped. That morning, on receiving a request for help from the local government, an electronic countermeasures brigade of the Eastern Theater Navy immediately dispatched two typhoon relief detachments to Tangjiawan and Shayan Village, where they moved more than 200 affected people to resettlement sites.

There were many such rescues in those days! The area where a naval regiment is stationed was also hit by the typhoon; heavy rain triggered landslides and floods, submerging parts of the area, disrupting traffic, and damaging farmland… As soon as disaster struck, the regiment activated its rescue and relief plan and sent more than 30 officers and soldiers of its emergency rescue team to the front line of flood control and disaster relief. After six hours of nonstop work, they shored up the slope with sacks filled with stones, cleared the mud to reopen the road, moved and resettled 45 affected people, and gave them medical care such as wound dressing.

Near the base of an Eastern Theater naval aviation division, local people were likewise trapped. On learning of the danger, the division immediately activated its rescue and relief plan and sent an emergency detachment to help. That morning, it helped evacuate more than 120 households and 50 apartments at the airfield, and housed 16 people in the camp auditorium.

As of 7:00 a.m. on the 11th, the naval forces stationed in Ningbo had deployed more than 200 personnel, moved hundreds of trapped people to safety, and rushed dozens of tons of supplies to where they were needed.

Meanwhile, on learning that the thousand-year-old city of Linhai was in crisis, the sailors of the East China Sea also rushed to help! On the afternoon of the 10th, after receiving the call for help, a fuel technical support brigade of the Eastern Theater Navy immediately activated its typhoon emergency plan and formed a water rescue team. More than 20 officers and soldiers reached the affected area in two groups that night and immediately threw themselves into intense rescue work. Later, more than 100 officers and soldiers of a naval aviation brigade in the theater, carrying essential rescue equipment and supplies, also rushed to the front line. Soldiers and residents worked side by side to get through the hardship.

"With the People's Liberation Army here, we ordinary folk feel at ease! The lads have worked so hard…" "A thumbs-up for our soldiers, you're the best!" "Thank you, People's Liberation Army! Thank you, people's heroes!" Praise like this never stopped in those days. The courage and tenacity of the naval officers and soldiers stationed in Ningbo, and their devotion to the people without regard for their own safety, deeply moved those affected, who praised them as the people's own sons. (Reporter Wang Xiaofeng correspondent Liu Jun Pan Li Shen Yang Photo courtesy of the troops stationed in Ningbo)